How to use AI safely at work (and avoid getting fired for using ChatGPT)

There is an old saying in tech: if you are not paying for a product, YOU are the product.

Unfortunately, the latest tech revolution is no different: generative AI providers such as OpenAI, Microsoft, Google and Anthropic collect or use your data by default on at least some of their plans.

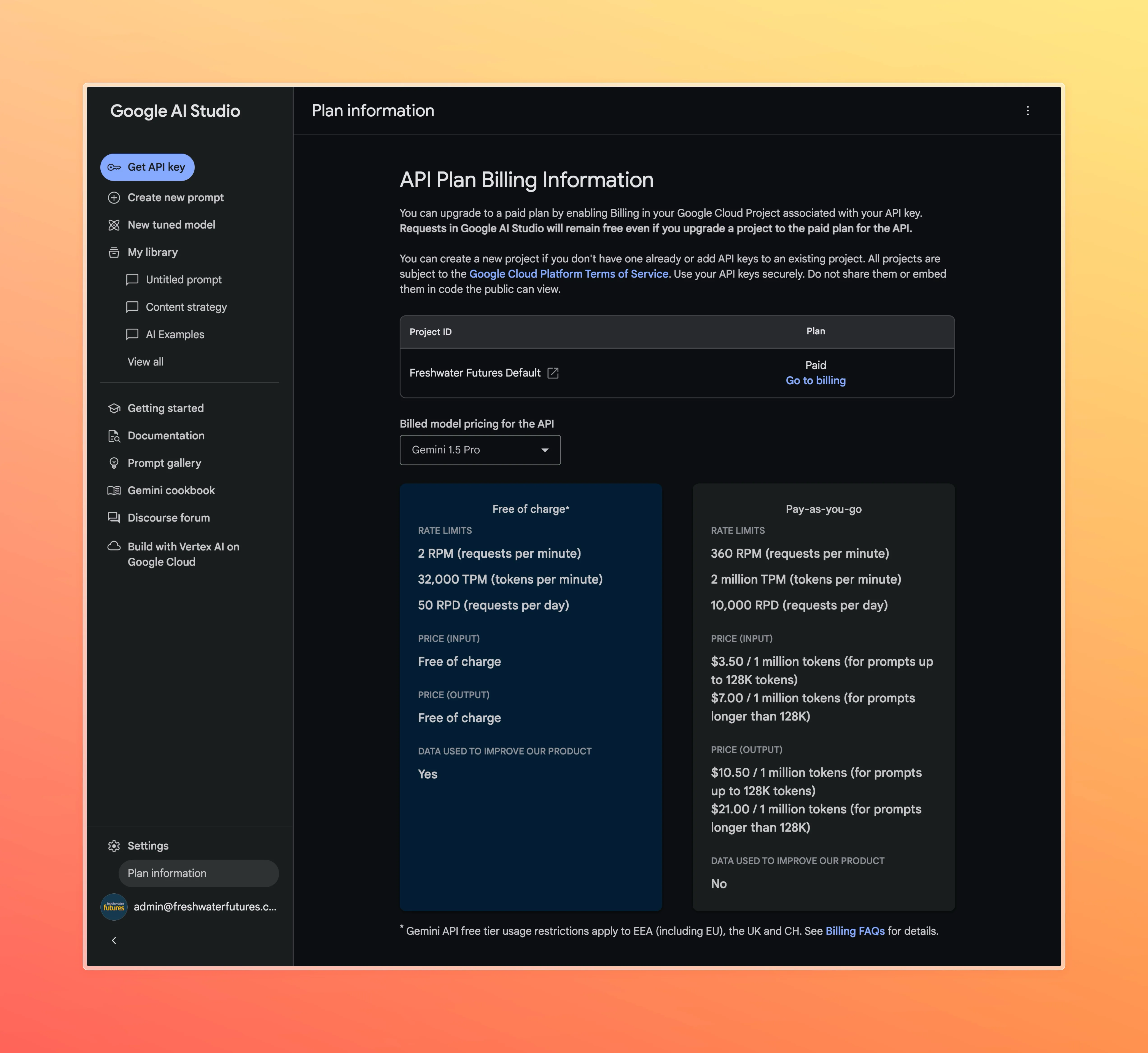

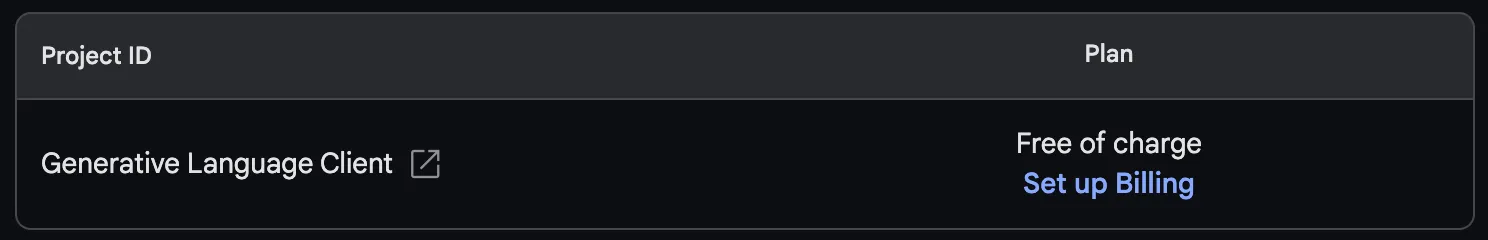

Here is a great demonstration of the adage “if you are not paying for a product, YOU are the product”, in this case from Google’s AI Studio product: the feature “Data used to improve our product” shows a yes for free and a no for paid.

This practice raises serious concerns about data privacy and security. When companies use your interactions to improve their AI models, they potentially expose sensitive information, including proprietary business data and personal customer details. This can lead to intellectual property risks, compliance violations, and erosion of customer trust. In an era where data is a valuable asset, this 'free' model comes at a potentially high cost. Here is a great article on the risks for more info.

Some organisations respond to this by banning ChatGPT and its ilk, but this has many drawbacks: your staff might use it anyway, and you miss out on all the benefits while also frustrating your people.

A better way is to teach your staff how to use AI safely.

So here is:

The Ultimate Guide to using AI Safely at Work

The best approach depends on which tool you are thinking of using, so find your vendor below and review the tips for using it safely.

OpenAI & ChatGPT

OpenAI provides ChatGPT in the following ways:

For consumers: ChatGPT Free & Plus

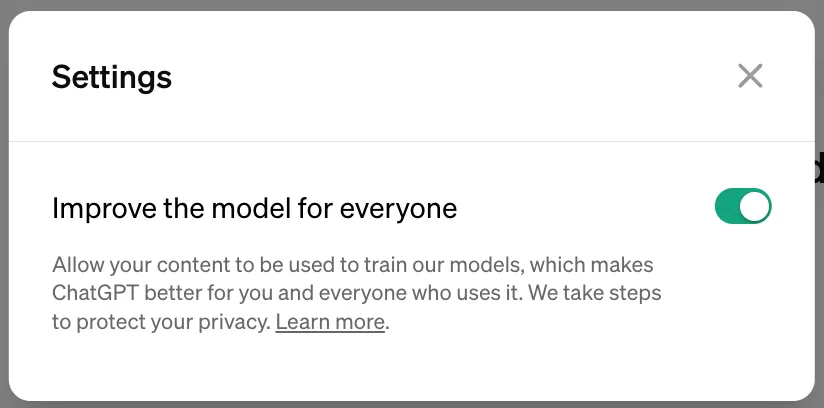

By default, data from both “Free” and “Plus” accounts is used for model improvement and can be seen by human reviewers.

Turn this off by disabling “Improve the model for everyone” in settings.

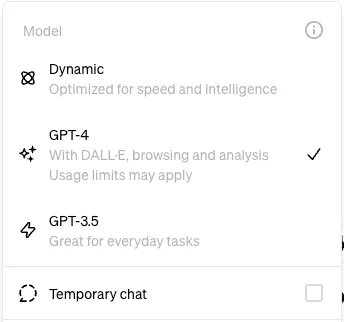

For sensitive data, turn on “Temporary chat” to have the history deleted after 30 days.

For business: ChatGPT Teams & Enterprise

- For an extra $5/month you can rest easy:

- By default your data is not used for model improvement

For developers: via platform.openai.com or via the API

- By default your data is not used for model improvement

- API and Playground requests will not be used to train their models. Learn more

Microsoft & Copilot

Microsoft provides their Copilot in the following ways:

For consumers: copilot.microsoft.com and bing.com/chat under these terms

Data from both “Free” and “Pro” accounts is used for model improvement and can be seen by human reviewers.

You should not enter or upload any data you would not want reviewed.

To turn off use of your data, turn on commercial data protection (available if you have a work or school account).

For business: inside Microsoft365.com under Microsoft’s general privacy terms - and more detailed info here

- Customer data is not used to train the foundational large language models that Copilot uses.

- Customer prompts and responses are not available to human abuse moderators (opted-out)

- However, note that Microsoft seems to have pre-existing broad contractual access to your data for product improvement under their general privacy terms.

For developers: as a cloud service via ai.azure.com under this Data Processing Agreement

- Azure OpenAI service:

- Human abuse review is on by default but you can request to opt-out here

- [Does MS use customer data?]

- Note that Microsoft seems to have pre-existing broad contractual access to your data for product improvement under their cloud data processing agreement.

Google & Gemini

Google provides their Gemini model in three main ways:

For consumers: gemini.google.com under these terms

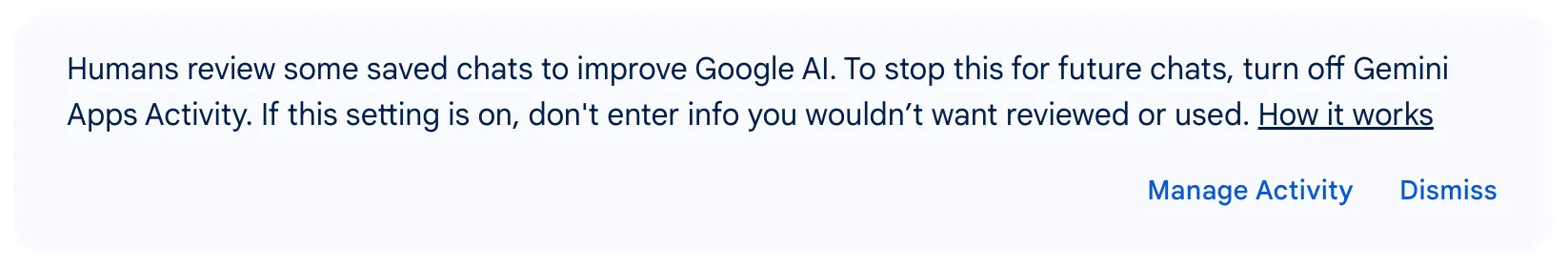

By default your data is used to improve the product and seen by human reviewers

To stop future conversations from being reviewed or used to improve Google machine-learning technologies, turn off Gemini Apps Activity.

At least they are upfront about it!

For enterprise and business via Google Cloud or inside Google Workspace under these terms

- By default your data is not used to improve the product

For developers: aistudio.google.com using these terms

By default your data is used to improve the product and seen by human reviewers on the free plan

To stop future conversations from being reviewed or used to improve Google’s machine learning technologies, turn on billing for your project.

Bonus: Google AI Studio usage (on aistudio.google.com) remains free of charge regardless of whether you set up billing. Woohoo! Free private Gemini!!

Anthropic & Claude

Anthropic provides Claude in the following ways:

For consumers: via claude.ai under these terms.

- Unlike their competition, by default, conversations are not used to train the model

- Like Microsoft, they can use your conversations to “improve their products”.

For developers: via API under these terms.

- By default, API calls are not used to train the model

- Anthropic explicitly states they may not train models on customer content from paid API services

Conclusion

Stay safe out there!

The AI revolution offers incredible opportunities, but it's crucial to use these tools wisely.

Remember, if you're not paying for the product, you might be the product. Even paid services require careful consideration of their data policies.

Opt out of data collection where possible, especially for sensitive information. For business-critical data, choose enterprise or API versions with stronger protections.

Educate your team and establish clear AI usage guidelines. By doing so, you can harness AI's potential while protecting your company's intellectual property and customer trust.
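One way to make such guidelines concrete is to write the defaults from this guide down as a small lookup table that your team can query. The sketch below is only an illustration: the names `Policy`, `POLICIES` and `ok_for_sensitive_data` are made up for this example, the entries simply restate the defaults summarised above (which vendors can change at any time), and the single yes/no flag ignores caveats such as broad "product improvement" terms. Azure OpenAI is left out because this guide does not settle its training policy, and anything not in the table is treated as unsafe.

```python
from typing import NamedTuple, Optional


class Policy(NamedTuple):
    trains_by_default: bool   # is your data used for model improvement by default?
    opt_out: Optional[str]    # the opt-out step described in this guide, if any


# Defaults as summarised in this guide. Assumption: these still match the
# vendors' current terms, so re-check them before relying on this table.
POLICIES = {
    ("openai", "consumer"): Policy(True, 'Turn off "Improve the model for everyone"'),
    ("openai", "business"): Policy(False, None),
    ("openai", "developer"): Policy(False, None),
    ("microsoft", "consumer"): Policy(True, "Turn on commercial data protection"),
    ("microsoft", "business"): Policy(False, None),
    ("google", "consumer"): Policy(True, "Turn off Gemini Apps Activity"),
    ("google", "business"): Policy(False, None),
    ("google", "developer"): Policy(True, "Turn on billing for your project"),
    ("anthropic", "consumer"): Policy(False, None),
    ("anthropic", "developer"): Policy(False, None),
}


def ok_for_sensitive_data(vendor: str, tier: str, opted_out: bool = False) -> bool:
    """Return True only if, per the table above, data is not used for training."""
    policy = POLICIES.get((vendor.lower(), tier.lower()))
    if policy is None:
        # Unknown vendor or tier: assume the worst.
        return False
    if not policy.trains_by_default:
        return True
    # Training is on by default, so it is only acceptable after opting out.
    return opted_out and policy.opt_out is not None


if __name__ == "__main__":
    for (vendor, tier), policy in POLICIES.items():
        if policy.trains_by_default:
            print(f"{vendor}/{tier}: opt out first ({policy.opt_out})")
        else:
            print(f"{vendor}/{tier}: not used for training by default")
```

For example, `ok_for_sensitive_data("OpenAI", "consumer")` returns `False` until you pass `opted_out=True`, while an unlisted tool always returns `False`. Defaulting to "no" for anything unknown keeps the guideline conservative when a new tool appears.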

Stay informed, stay cautious, and most importantly, stay in control of your data. The future of AI is exciting – let's make it a secure one too!